Test flakiness is a big issue. Not only can it be a colossal time investment to detect and fix, but it hurts perhaps the biggest value you get from your tests—their trustworthiness. A test you cannot trust is a useless test. Time spent maintaining a useless test is time wasted.

I've already shared with you a practical guide on addressing flaky tests, but today I'd like to talk about what it all starts from. Detecting flakiness.

The roots

One thing I've learned over the years of testing is that flakiness can hide its roots in every layer of your system. From the way you write the test to the test setup and the tools you're using. Even in the hardware that runs all of that! And the more layers your test involves, the easier it is for flakiness to slip through.

It's no coincidence that end-to-end tests are notoriously flaky. There are a lot of things at play between the two ends. Add to that a generally higher cost of maintenance, and you've got yourself a problem nobody on the team wants to deal with.

The worst thing of all is that the sporadic nature of flakiness makes it compellingly easy to brush off an occasionally failing test. When so many layers are involved, who knows, perhaps it was a hiccup somewhere down the line. Just hit the "Rerun" button, see the test pass, and move on with your day.

Sadly, that is how most teams approach flakiness in its early stages. Ignore it once, then twice, and, suddenly, you are gambling on the CI results of your product, pulling those reruns just to see it turn green.

I've talked with teams that have given up on the whole idea of E2E testing because of how unreliable their test suites eventually became.

That's not how automated tests are supposed to work.

Catching the flakes

In larger teams, flakiness can slip through your fingers in no time. You may not be the one experiencing it, or rerunning the tests, or being affected by it in any way. Until you are. It is rather nasty to find yourself in a situation where an important pull request is stalled until you win the rerun game. I've been there. That's why I put a ton of effort into keeping my builds green at all times, eradicating flakiness as soon as it surfaces.

It is all the more useful to have tools by your side to help you detect and track flaky tests across your product.

Sentry recently announced Test Analytics, and I wanted to try it straight away. The best way to try it is to reproduce one of the common mistakes that lead to flakiness and see how the tool helps me catch and fix it.

The test

Here I've got a basic in-browser test using Vitest Browser Mode:

```tsx
import { render } from 'vitest-browser-react'
import { screen } from '@testing-library/react'
import { worker } from '../src/mocks/browser.js'
import App from '../src/App.jsx'

beforeAll(() => worker.start())
afterAll(() => worker.stop())

function sleep(duration: number) {
  return new Promise((resolve) => {
    setTimeout(resolve, duration)
  })
}

it('renders the list of posts', async () => {
  render(<App />)

  await sleep(250)
  const posts = screen.getAllByRole('listitem')

  expect(posts).toEqual([
    expect.toHaveTextContent('First post'),
    expect.toHaveTextContent('Second post'),
  ])
})
```

This one tests the <App /> component that renders a list of the user's posts. Fairly simple. The sharp-eyed among you have likely already spotted the problem with this test, but let's imagine I didn't. I wrote it, ran it, saw it pass, got the changes approved, and now it runs on main for everyone on my team.

Let's see what the experience will look like for me (and my teammates) if I've got test analytics set up to track flaky tests.

Getting started with Test Analytics

Test Analytics is a free feature that can be used standalone or as a part of Codecov (if you've used it before). You can integrate it into your project in three steps.

Step 1: Install Codecov app

For starters, make sure you have the Codecov app installed on GitHub and authorized for the repositories you want to add Test Analytics to.

- Click here to install the Codecov app on GitHub.

Step 2: Configure Vitest

Test Analytics works by parsing the results of your test run in JUnit format (don't worry, you don't have to actually use JUnit!). I use Vitest in my project, and luckily it supports emitting the test report in that format.
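To make the format a bit less abstract, here is a rough sketch of the kind of data a JUnit-style report carries and how a tool could read pass/fail results out of it. The sample XML is hand-written for illustration (real Vitest output has more attributes), and `summarizeJUnit` is a hypothetical helper, not Codecov's actual parser.

```typescript
// Hand-written, illustrative JUnit-style report (not real Vitest output).
const sampleReport = `
<testsuites>
  <testsuite name="App.test.tsx" tests="2" failures="1">
    <testcase classname="App.test.tsx" name="renders the list of posts" time="0.31">
      <failure message="illustrative failure message" />
    </testcase>
    <testcase classname="App.test.tsx" name="another test" time="0.02" />
  </testsuite>
</testsuites>`

interface TestCaseResult {
  name: string
  durationSeconds: number
  failed: boolean
}

// Hypothetical helper: a naive regex-based reader, good enough for a sketch.
// A production tool would use a proper XML parser instead.
function summarizeJUnit(xml: string): TestCaseResult[] {
  const results: TestCaseResult[] = []
  // Matches a self-closing <testcase ... /> or a <testcase ...>...</testcase> pair.
  const testCasePattern = /<testcase\b([^>]*?)(?:\/>|>([\s\S]*?)<\/testcase>)/g

  let match: RegExpExecArray | null
  while ((match = testCasePattern.exec(xml)) !== null) {
    const attributes = match[1] ?? ''
    const body = match[2] ?? ''
    const name = /\bname="([^"]*)"/.exec(attributes)?.[1] ?? '(unnamed)'
    const time = /\btime="([^"]*)"/.exec(attributes)?.[1] ?? '0'

    results.push({
      name,
      durationSeconds: Number(time),
      // A test case counts as failed if it contains a <failure> or <error> child.
      failed: /<(failure|error)\b/.test(body),
    })
  }

  return results
}

console.log(summarizeJUnit(sampleReport))
```

Each test case ends up with a name, a duration, and a pass/fail flag, which is roughly the raw material a service needs to track failures over time.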

Add junit as a test reporter in vitest.config.ts:

```ts
// vitest.config.ts
import { defineConfig } from 'vitest/config'

export default defineConfig({
  test: {
    reporters: ['default', 'junit'],
    outputFile: './test-report.junit.xml',
  },
})
```

I've also added the default reporter so I can still see the test output in my terminal like I'm used to.

And yes, Vitest supports different reporters and code coverage in the Browser Mode too!

Step 3: Upload test reports

The only thing left to do is to run automated tests on CI and upload the generated report to Codecov. I will use GitHub Actions for that.

```yaml
name: ci

on:
  push:
    branches: [main]
  pull_request:
  workflow_dispatch:

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Install dependencies
        run: npm install

      - name: Install browsers
        run: npx playwright install chromium

      - name: Run tests
        # Use npx so the locally installed Vitest binary is found.
        run: npx vitest

      - name: Upload test results
        # Always upload the test results because
        # we need to analyze failed test runs!
        if: ${{ !cancelled() }}
        uses: codecov/test-results-action@v1
        with:
          token: ${{ secrets.CODECOV_TOKEN }}
```

Learn how to create the CODECOV_TOKEN here.

Notice that the upload step is set to run even when an earlier step fails (${{ !cancelled() }}) so we can analyze the failed test runs as well.

Reading the analytics

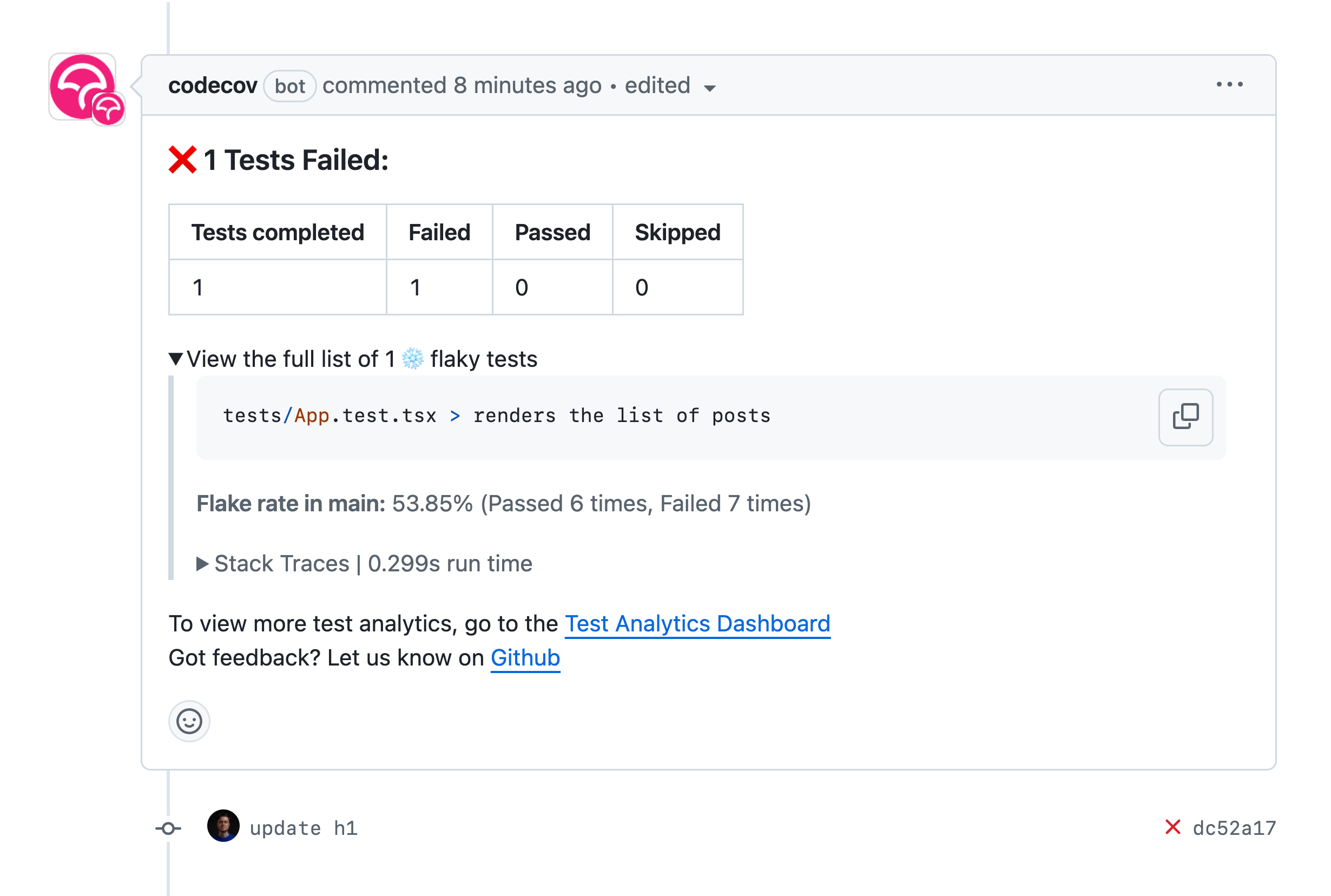

Once the setup is done, you will start getting reports in your pull requests highlighting overall test results and also any detected flakiness.

Once someone encounters the flakiness coming from my test, they will see an immediate report from Codecov right under the pull request:

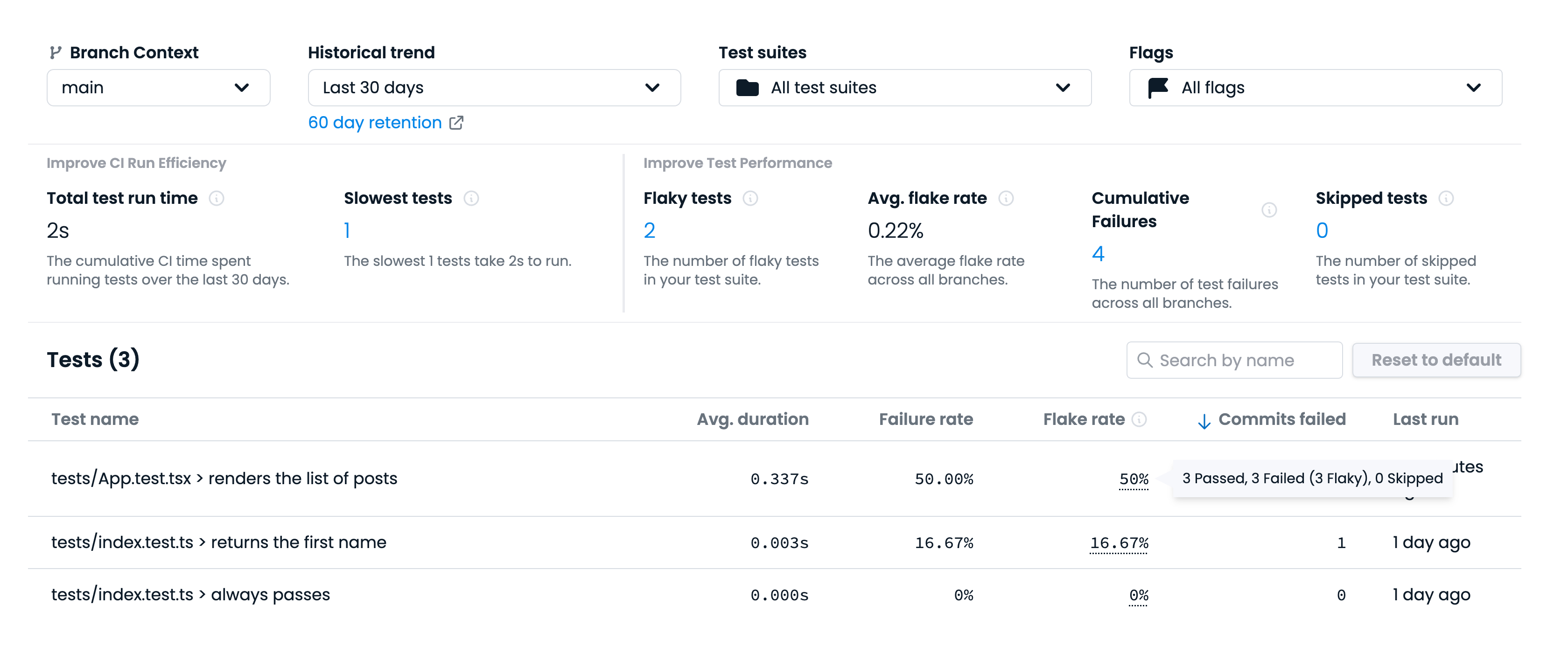

A more detailed report is available on Codecov. Open your repository and navigate to the "Tests" tab that will contain the Test Analytics Dashboard.

Here's what the test analytics report looks like on the main branch:

I can see straight away that the test I've introduced has been flaky on main ("renders the list of posts"). That won't do!

There are also plenty of other metrics on display here, but the most useful ones for me are:

- Flaky tests, the list of unreliable tests I have;

- Slowest tests, the list of tests that take most time to run;

- Average flake rate, to help me monitor my efforts as I go and fix flaky tests across the entire project.
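To get a feel for what a flake rate measures, here is a rough sketch of how one could be computed from run history. This is not Codecov's formula. It assumes a failure counts as a flake when the same test also passed on the same commit, and the `TestRun` shape, the `flakeRate` function, and the run history are all made up for illustration.

```typescript
interface TestRun {
  testName: string
  commit: string
  passed: boolean
}

// Assumption for this sketch: a failure is a "flake" when the same test
// also passed on the same commit (for example, after a rerun).
function flakeRate(runs: TestRun[], testName: string): number {
  const relevant = runs.filter((run) => run.testName === testName)
  if (relevant.length === 0) return 0

  const passedCommits = new Set(
    relevant.filter((run) => run.passed).map((run) => run.commit),
  )
  const flakyFailures = relevant.filter(
    (run) => !run.passed && passedCommits.has(run.commit),
  )

  return flakyFailures.length / relevant.length
}

// Made-up history: one failure on commit "a1" that passed after a rerun.
const history: TestRun[] = [
  { testName: 'renders the list of posts', commit: 'a1', passed: false },
  { testName: 'renders the list of posts', commit: 'a1', passed: true },
  { testName: 'renders the list of posts', commit: 'b2', passed: true },
  { testName: 'renders the list of posts', commit: 'c3', passed: true },
]

// One flaky failure out of four runs.
console.log(flakeRate(history, 'renders the list of posts')) // 0.25
```

Whatever the exact definition, the useful part is the trend: the number should go down as flaky tests get fixed.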

With a way to track that problematic test in place, it's time for me to fix it for good.

Fixing the test

Normally, I would start by skipping the flaky test entirely. But in this case, I already know the root cause, so I can propose a fix straight away.

The reason this test is flaky hides in plain sight:

```ts
await sleep(250) // ❌
```

Using sleep in a test is a terrible idea. Your intention is never to wait for a certain time but rather to wait for a certain state. A fixed delay only works while the thing you are waiting for happens to finish faster than the delay.

In the <App /> test case, that state is when the response with the user's posts comes in and the list of posts renders in the UI. I need to rewrite the test to reflect just that:

```diff
-await sleep(250)
-const posts = screen.getAllByRole('listitem')
+const posts = await screen.findAllByRole('listitem')
```

By swapping the getAllByRole query for findAllByRole, I am introducing a promise that will resolve once the list items are present on the page. Lastly, I await that promise, making my test resilient to how long it takes to fetch the data.
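If you are curious why waiting for a state beats waiting for a time, here is a simplified sketch of the retry-until-ready idea. It is not Testing Library's implementation. `waitForValue` and `renderPostsAfter` are hypothetical stand-ins, and the 1000ms timeout, 50ms interval, and 400ms latency are values assumed for this sketch.

```typescript
function sleep(duration: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, duration))
}

// Hypothetical helper: keep calling `read` until it returns a non-empty
// list, or give up once `timeout` milliseconds have elapsed.
async function waitForValue<T>(
  read: () => T[],
  { timeout = 1000, interval = 50 } = {},
): Promise<T[]> {
  const deadline = Date.now() + timeout

  while (true) {
    const value = read()
    if (value.length > 0) return value
    if (Date.now() >= deadline) {
      throw new Error(`Timed out after ${timeout}ms`)
    }
    await sleep(interval)
  }
}

// Stand-in for the UI: the "rendered" posts appear after `latency` ms,
// much like list items appearing once a network response arrives.
function renderPostsAfter(latency: number): () => string[] {
  let rendered: string[] = []
  setTimeout(() => {
    rendered = ['First post', 'Second post']
  }, latency)
  return () => rendered
}

async function demo(): Promise<void> {
  // A slow response (400ms) outlasts a fixed 250ms sleep,
  // so reading right after the sleep finds nothing.
  const readSlow = renderPostsAfter(400)
  await sleep(250)
  console.log('after sleep(250):', readSlow())

  // Waiting for the state succeeds regardless of the latency,
  // as long as it stays within the timeout.
  const posts = await waitForValue(renderPostsAfter(400))
  console.log('after waiting for the state:', posts)
}

demo()
```

The fixed sleep silently encodes an assumption about how fast the response is. The polling version only encodes an upper bound, which is why it stays green on a slow CI machine.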

And with that, the flake is gone! 🎉

Full example

You can find a full example of using Test Analytics with Vitest on GitHub:

kettanaito/test-analytics-vitest